Bias and variance are common terms you will hear in machine learning. They describe two different sources of prediction error.

Bias:

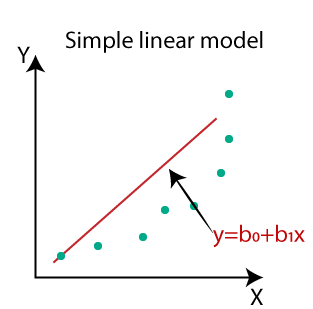

Bias is the error that comes from a model's simplifying assumptions. We see this issue more in parametric machine learning algorithms. Most of them assume a fixed form, such as a straight line or a low-degree polynomial, so the fitted model cannot pass through (or even closely follow) all of the data points. This leaves systematic error even on the training data, which leads to underfitting. In the image above, the straight line does not touch all the data points, so the model will always have some error (bias).

Examples: linear regression, logistic regression, etc.
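To make the underfitting idea concrete, here is a minimal sketch in plain Python. The data and the helper names `fit_line` and `mse` are made up for illustration. It fits y = mx + c by ordinary least squares to points that really follow the curve y = x², with no noise at all. Even so, the training error stays well above zero, because no straight line can follow a curve. That leftover error is bias.

```python
# Minimal sketch: fit a straight line y = m*x + c by ordinary least squares
# to data that actually follows a curve (y = x**2). Data is hypothetical.

def fit_line(xs, ys):
    """Return slope m and intercept c that minimize squared error (closed form)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    m = cov_xy / var_x
    c = mean_y - m * mean_x
    return m, c

def mse(xs, ys, predict):
    """Mean squared error of a prediction function on (xs, ys)."""
    return sum((predict(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Noise-free curved data: a perfect model would have zero training error.
xs = [x / 10 for x in range(-20, 21)]  # -2.0, -1.9, ..., 2.0
ys = [x ** 2 for x in xs]

m, c = fit_line(xs, ys)
train_error = mse(xs, ys, lambda x: m * x + c)
print(f"m = {m:.3f}, c = {c:.3f}, training MSE = {train_error:.3f}")
```

Because this data is symmetric around x = 0, the best line happens to be flat (m ≈ 0, c ≈ 1.4), and no choice of m and c can bring the training error down to zero.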

Variance:

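Variance is how much a model's predictions change when it is trained on a different sample of the data. We see this issue more in non-parametric machine learning algorithms. Because they are flexible (non-linear), they can learn almost everything about the training data, including its noise, and can fit each and every data point. As a result they have very high variance, which leads to overfitting.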

Examples: decision trees, k-nearest neighbors (k-NN), etc.
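A similarly small sketch shows the opposite behavior with 1-nearest-neighbor regression. The data is hypothetical (y = x² plus Gaussian noise), and `knn_predict` and `make_data` are illustrative helpers, not a library API. The training error is exactly zero because every training point is its own nearest neighbor, yet the prediction at a fixed query point moves around when the model is retrained on a fresh sample. That spread is variance.

```python
import random

def knn_predict(train, x, k):
    """k-NN regression: average the y-values of the k training points nearest to x."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / k

def make_data(n, seed):
    """Hypothetical data: y = x**2 plus Gaussian noise (sd 0.5), x uniform in [-2, 2]."""
    rng = random.Random(seed)
    xs = [rng.uniform(-2, 2) for _ in range(n)]
    return [(x, x ** 2 + rng.gauss(0, 0.5)) for x in xs]

# 1-NN memorizes the training set, so its training error is zero.
train = make_data(50, seed=0)
train_mse = sum((knn_predict(train, x, k=1) - y) ** 2 for x, y in train) / len(train)
print(f"1-NN training MSE: {train_mse:.3f}")

# Retrain on 200 fresh samples and look at the prediction at one query point.
preds = [knn_predict(make_data(50, seed=s), 1.0, k=1) for s in range(1, 201)]
mean_pred = sum(preds) / len(preds)
spread = sum((p - mean_pred) ** 2 for p in preds) / len(preds)
print(f"variance of the 1-NN prediction at x = 1.0: {spread:.3f}")
```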

Trade-off:

The bias-variance trade-off is the balance we strike between two extremes: high bias/low variance (underfitting) and low bias/high variance (overfitting).
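For squared-error loss, this trade-off can be written down exactly with the standard bias-variance decomposition. Assume the data is generated as y = f(x) + ε, where the noise ε has mean 0 and variance σ², and let f̂ be the model learned from a random training set. Taking the expectation over training sets and noise, the expected error at a point x splits into three parts:

$$
\mathbb{E}\big[(y - \hat f(x))^2\big]
= \underbrace{\big(\mathbb{E}[\hat f(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}\big[(\hat f(x) - \mathbb{E}[\hat f(x)])^2\big]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible error}}
$$

The first term measures how far the average model is from the truth, the second measures how much individual models scatter around that average, and σ² is noise that no model can remove.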

For example:

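Decision trees grown to full depth tend to overfit (high variance), so the trade-off is made by pruning the tree. For k-NN, we choose the value of k: a small k (such as k = 1) overfits and has high variance, while a larger k averages over more neighbors, which lowers the variance but raises the bias.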
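Choosing k for k-NN can be sketched as follows, again on hypothetical noisy y = x² data with illustrative helper functions. The training error is zero at k = 1 and grows as k increases, so it cannot be used to pick k. Instead, the error on a separate validation set indicates which k balances bias and variance for this particular data.

```python
import random

def knn_predict(train, x, k):
    """k-NN regression: average y of the k training points nearest to x."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / k

def make_data(n, rng):
    """Hypothetical data: y = x**2 plus Gaussian noise (sd 0.5), x uniform in [-2, 2]."""
    data = []
    for _ in range(n):
        x = rng.uniform(-2, 2)
        data.append((x, x ** 2 + rng.gauss(0, 0.5)))
    return data

def mse(train, data, k):
    """Mean squared error on `data` of a k-NN model built from `train`."""
    return sum((knn_predict(train, x, k) - y) ** 2 for x, y in data) / len(data)

rng = random.Random(42)
train = make_data(100, rng)
valid = make_data(100, rng)

train_err, valid_err = {}, {}
for k in [1, 3, 5, 10, 25, 50]:
    train_err[k] = mse(train, train, k)
    valid_err[k] = mse(train, valid, k)
    print(f"k={k:>2}  train MSE={train_err[k]:.3f}  validation MSE={valid_err[k]:.3f}")

best_k = min(valid_err, key=valid_err.get)
print("k with the lowest validation error:", best_k)
```

For decision trees, scikit-learn exposes comparable knobs on `DecisionTreeClassifier` and `DecisionTreeRegressor`, such as `max_depth` and the cost-complexity pruning parameter `ccp_alpha`.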

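Linear regression, on the other hand, can underfit when the true relationship is not a straight line. Here the fix is to add flexibility, for example polynomial features such as x², rather than to adjust the intercept. In y = mx + c, the constant c is sometimes called the "bias term", but it only shifts the line up or down and is not the same thing as the bias discussed in this article.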
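One way to reduce underfitting in linear regression is to add polynomial features such as x². The minimal sketch below uses a hypothetical helper `fit_polynomial` that solves the least-squares normal equations with Gaussian elimination. On noise-free y = x² data, a straight line (degree 1) keeps a large training error, while adding an x² feature (degree 2) drives it to essentially zero. The degree-2 model is still linear in its weights, so it is still fit as a linear regression.

```python
def fit_polynomial(xs, ys, degree):
    """Least-squares fit of y = w0 + w1*x + ... + wd*x**d via the normal equations."""
    n = degree + 1
    # Normal equations (A^T A) w = A^T y, where A has columns 1, x, x**2, ...
    G = [[sum(x ** (i + j) for x in xs) for j in range(n)] for i in range(n)]
    b = [sum((x ** i) * y for x, y in zip(xs, ys)) for i in range(n)]
    # Gaussian elimination with partial pivoting on the augmented matrix [G | b].
    M = [row + [bi] for row, bi in zip(G, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, n):
            factor = M[r][col] / M[col][col]
            for c in range(col, n + 1):
                M[r][c] -= factor * M[col][c]
    # Back substitution.
    w = [0.0] * n
    for i in range(n - 1, -1, -1):
        w[i] = (M[i][n] - sum(M[i][j] * w[j] for j in range(i + 1, n))) / M[i][i]
    return w

def predict(w, x):
    """Evaluate the fitted polynomial at x."""
    return sum(wi * x ** i for i, wi in enumerate(w))

def mse(xs, ys, w):
    """Mean squared error of the fitted polynomial on (xs, ys)."""
    return sum((predict(w, x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

xs = [x / 10 for x in range(-20, 21)]  # -2.0 .. 2.0
ys = [x ** 2 for x in xs]               # noise-free curved data

for degree in (1, 2):
    w = fit_polynomial(xs, ys, degree)
    print(f"degree {degree}: training MSE = {mse(xs, ys, w):.6f}")
```

With noisy data, pushing the degree much higher would start to fit the noise, which is the variance side of the trade-off.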

Note:

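Increasing the bias (for example, by making the model simpler) generally decreases the variance.

Increasing the variance (for example, by making the model more complex) generally decreases the bias.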
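These statements describe a general tendency rather than a strict rule. The sketch below estimates both quantities by simulation for k-NN regression at the single point x0 = 0. The true function, noise level, sample sizes, and helper names are all assumptions chosen for illustration. It retrains on many independent training sets, then measures how far the average prediction is from the true value (bias²) and how much individual predictions spread around that average (variance). A larger k means a simpler, smoother model.

```python
import random

def knn_predict(train, x, k):
    """k-NN regression: average y of the k training points nearest to x."""
    nearest = sorted(train, key=lambda p: abs(p[0] - x))[:k]
    return sum(y for _, y in nearest) / k

def true_f(x):
    """Hypothetical 'true' relationship between x and y."""
    return x ** 2

def sample_training_set(n, rng):
    """Draw n noisy points (sd 0.5) from true_f with x uniform in [-2, 2]."""
    data = []
    for _ in range(n):
        x = rng.uniform(-2, 2)
        data.append((x, true_f(x) + rng.gauss(0, 0.5)))
    return data

def bias2_and_variance(k, x0=0.0, n=50, trials=500, seed=0):
    """Estimate squared bias and variance of the k-NN prediction at x0."""
    rng = random.Random(seed)
    preds = [knn_predict(sample_training_set(n, rng), x0, k) for _ in range(trials)]
    mean_pred = sum(preds) / trials
    bias2 = (mean_pred - true_f(x0)) ** 2
    variance = sum((p - mean_pred) ** 2 for p in preds) / trials
    return bias2, variance

results = {k: bias2_and_variance(k) for k in (1, 5, 20)}
for k, (b2, var) in results.items():
    print(f"k={k:>2}  bias^2={b2:.4f}  variance={var:.4f}")
```

Comparing k = 1 with k = 20 under these settings should show bias² going up and variance going down as the model becomes simpler.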

Overfitting: Good performance on the training data, poor generalization to other data.

Underfitting: Poor performance on the training data and poor generalization to other data.

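So the trade-off lies between variance and bias: the goal is a model complex enough to capture the real pattern (low bias) but not so complex that it fits the noise (low variance).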