When I ask beginners what the types of regression are, the expected answer is linear regression and logistic regression, because these are the two algorithms that all beginners start with.

Coming to the point, in this article we will look at five types of regression:

- Linear regression

- Logistic regression

- Polynomial regression

- Ridge Regression

- Lasso Regression

Linear/logistic regression:

Linear regression has been one of the most widely used techniques in analytics for decades. It comes in two types: simple and multiple regression. When we have one input (independent) variable, we call it simple regression; when we have more than one, we call it multiple regression. In practice, most use cases involve multiple regression. Logistic regression, despite its name, is mainly used for classification: it models the probability that an observation belongs to a class (for example, yes or no).
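A minimal sketch of both cases, assuming NumPy is available. The hypothetical helper `fit_linear_regression` adds a column of ones for the intercept and solves ordinary least squares with `np.linalg.lstsq`; the toy data are made up and noise-free, so the known coefficients are recovered.

```python
import numpy as np


def fit_linear_regression(X, y):
    """Ordinary least squares. Returns (intercept, coefficients)."""
    X = np.asarray(X, dtype=float)
    if X.ndim == 1:                      # simple regression: a single input variable
        X = X.reshape(-1, 1)
    # Prepend a column of ones so the first fitted value is the intercept.
    A = np.column_stack([np.ones(len(X)), X])
    beta, *_ = np.linalg.lstsq(A, np.asarray(y, dtype=float), rcond=None)
    return beta[0], beta[1:]


# Simple regression: one input, y = 1 + 2x
x = np.array([0.0, 1.0, 2.0, 3.0, 4.0])
b0, b = fit_linear_regression(x, 1 + 2 * x)
print("simple:", b0, b)        # intercept close to 1, slope close to 2

# Multiple regression: two inputs, y = 3 + 1.5*x1 - 0.5*x2
X = np.array([[0, 1], [1, 0], [2, 3], [3, 1], [4, 5]], dtype=float)
y = 3 + 1.5 * X[:, 0] - 0.5 * X[:, 1]
b0, b = fit_linear_regression(X, y)
print("multiple:", b0, b)      # intercept close to 3, slopes close to 1.5 and -0.5
```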

Important points to consider:

- Linear regression is very sensitive to outliers.

- Multicollinearity among the input variables makes the coefficient estimates unstable, which can reduce accuracy.

- It is fast and works well when the relationship is not too complex and the dataset is small.

- Very important: when the data is non-linear, linear regression underfits.
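To illustrate the first point, here is a minimal sketch, assuming NumPy: it fits a straight line with `np.polyfit` to made-up points lying exactly on y = 2x + 1, then replaces a single value with an outlier.

```python
import numpy as np

# Ten made-up points that lie exactly on y = 2x + 1.
x = np.arange(10, dtype=float)
y = 2 * x + 1

# np.polyfit with degree 1 returns [slope, intercept].
slope_clean, intercept_clean = np.polyfit(x, y, 1)

# Corrupt a single observation: the true value at x = 9 is 19.
y_outlier = y.copy()
y_outlier[-1] = 100.0

slope_out, intercept_out = np.polyfit(x, y_outlier, 1)

print(f"clean:        slope={slope_clean:.2f}, intercept={intercept_clean:.2f}")
print(f"with outlier: slope={slope_out:.2f}, intercept={intercept_out:.2f}")
```

Moving one of ten points changes the fitted slope from 2 to roughly 6.4. Least squares squares every residual, so a single large error pulls the whole line towards it.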

Polynomial regression:

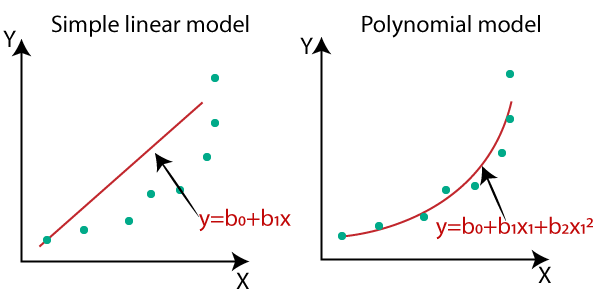

Polynomial regression is a good choice when the relationship is non-linear. In this regression the best-fit line is not a straight line but a curve, which can pass close to most of the data points.
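Polynomial regression is still fitted by ordinary least squares; only the inputs change. The sketch below, assuming NumPy and a made-up quadratic relationship, builds the columns 1, x, x², ... with `np.vander` and solves for the coefficients with `np.linalg.lstsq`. The helper names `fit_polynomial`, `predict_polynomial` and `mse` are illustrative.

```python
import numpy as np


def fit_polynomial(x, y, degree):
    """Least-squares polynomial fit. Returns coefficients c0, c1, ..., c_degree."""
    # Columns are x**0, x**1, ..., x**degree, so the model stays linear in the coefficients.
    A = np.vander(x, degree + 1, increasing=True)
    coef, *_ = np.linalg.lstsq(A, y, rcond=None)
    return coef


def predict_polynomial(coef, x):
    return np.vander(x, len(coef), increasing=True) @ coef


x = np.linspace(-3, 3, 25)
y = 0.5 * x**2 - x + 2            # a curved (quadratic) relationship


def mse(coef):
    """Mean squared error of a fitted polynomial on the data above."""
    return np.mean((predict_polynomial(coef, x) - y) ** 2)


line = fit_polynomial(x, y, 1)    # straight line: underfits the curve
quad = fit_polynomial(x, y, 2)    # degree-2 curve: matches the shape

print("degree 1 MSE:", mse(line))
print("degree 2 MSE:", mse(quad))
```

The straight line leaves a clear error on this curved data, while the degree-2 fit recovers the coefficients 2, -1 and 0.5 almost exactly.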

Important points to consider:

- It is prone to overfitting, whereas linear regression is prone to underfitting.

- It works very well when the relationship is non-linear.

- We need a good understanding of the data to choose the best exponents (the degree of the polynomial).
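A minimal sketch of the overfitting risk, assuming NumPy. With 10 noisy points from a made-up curve (sin(3x) plus noise), a degree-9 polynomial has as many coefficients as there are points, so it can pass through every training point. A training error near zero like this usually means the model is fitting the noise, which is why the degree is normally chosen by checking the error on held-out data.

```python
import numpy as np

rng = np.random.default_rng(0)
x = np.linspace(-1, 1, 10)
# Made-up noisy, non-linear data.
y = np.sin(3 * x) + rng.normal(scale=0.1, size=x.size)


def train_mse(degree):
    """Training mean squared error of a least-squares polynomial of the given degree."""
    coef = np.polyfit(x, y, degree)
    return np.mean((np.polyval(coef, x) - y) ** 2)


for degree in (1, 3, 9):
    print(f"degree {degree}: training MSE = {train_mse(degree):.6f}")
```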

Visual difference between polynomial and linear regression

Ridge Regression:

If there is high collinearity among the features (independent variables), linear and polynomial regression will not work well for prediction; in this case we can use Ridge regression.

Ridge regression is also called L2 regularization: it adds a penalty on the squared size of the coefficients (we will discuss this more in future articles). Regularization is used to overcome overfitting. It works as a trade-off, accepting a little bias in exchange for lower variance (the bias-variance trade-off).
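Written out, one standard form of the Ridge objective, with $\lambda \ge 0$ as the penalty strength and the intercept $\beta_0$ left unpenalized, is:

$$
\hat{\beta}_{\text{ridge}} = \arg\min_{\beta} \; \sum_{i=1}^{n} \Big( y_i - \beta_0 - \sum_{j=1}^{p} \beta_j x_{ij} \Big)^2 + \lambda \sum_{j=1}^{p} \beta_j^2
$$

Setting $\lambda = 0$ gives ordinary least squares; larger values of $\lambda$ push the coefficients $\beta_1, \dots, \beta_p$ towards zero.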

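It uses a shrinkage parameter (usually written λ, or alpha in some libraries) to overcome the multicollinearity problem. In general, shrinkage means that extreme estimates are “shrunk” towards a central value, like the sample mean; in Ridge regression the coefficients are shrunk towards zero. This reduces the variance of the estimates that comes from sampling error.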
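A minimal sketch of Ridge shrinkage, assuming NumPy and made-up data in which `x2` is almost a copy of `x1`. The hypothetical `ridge_fit` uses the closed-form solution on centred data (so the intercept is not penalized), and `lam` plays the role of the shrinkage parameter.

```python
import numpy as np


def ridge_fit(X, y, lam):
    """Ridge regression via the closed form (Xc^T Xc + lam * I)^-1 Xc^T yc.

    X and y are centred first so the intercept is not penalized.
    Returns (intercept, coefficients).
    """
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    p = X.shape[1]
    beta = np.linalg.solve(Xc.T @ Xc + lam * np.eye(p), Xc.T @ yc)
    return y_mean - x_mean @ beta, beta


rng = np.random.default_rng(42)
x1 = rng.normal(size=50)
x2 = x1 + rng.normal(scale=0.01, size=50)   # almost a copy of x1: high collinearity
X = np.column_stack([x1, x2])
y = 3 * x1 + rng.normal(scale=0.5, size=50)

for lam in (0.0, 1.0, 10.0):
    b0, beta = ridge_fit(X, y, lam)
    print(f"lambda={lam:5.1f}  coefficients={np.round(beta, 2)}")
```

With `lam = 0` this is plain least squares, and the two coefficients can come out large with opposite signs because the data barely distinguish `x1` from `x2`. As `lam` grows, the overall size of the coefficient vector can only shrink, and the weight tends to be shared between the two correlated features.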

Lasso Regression:

Lasso can be described as Ridge regression plus variable selection: it can shrink some coefficients to exactly zero, which helps with feature selection, because a variable whose coefficient is zero is not used by the model. It is also called L1 regularization, because its penalty is the sum of the absolute values of the coefficients.
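To make the "exactly zero" behaviour concrete, here is a minimal sketch of Lasso fitted by cyclic coordinate descent, assuming NumPy. Coordinate descent is one common way to fit Lasso (scikit-learn's `Lasso` also uses it), but the hypothetical `lasso_fit` below is a teaching version, not a library implementation. The key step is `soft_threshold`, which sets a coefficient to exactly 0 whenever its correlation with the partial residual is smaller than `lam`.

```python
import numpy as np


def soft_threshold(rho, lam):
    """Shrink rho towards zero by lam; anything inside [-lam, lam] becomes exactly 0."""
    return np.sign(rho) * max(abs(rho) - lam, 0.0)


def lasso_fit(X, y, lam, n_iters=200):
    """Hypothetical teaching implementation of Lasso via cyclic coordinate descent.

    Minimizes (1 / (2n)) * ||yc - Xc @ beta||^2 + lam * sum(|beta_j|),
    where Xc and yc are the centred inputs (so the intercept is not penalized).
    Returns (intercept, coefficients).
    """
    n, p = X.shape
    x_mean, y_mean = X.mean(axis=0), y.mean()
    Xc, yc = X - x_mean, y - y_mean
    col_sq = (Xc ** 2).sum(axis=0) / n   # (1/n) * ||x_j||^2 for each feature
    beta = np.zeros(p)
    for _ in range(n_iters):
        for j in range(p):
            # Partial residual: the part of yc not explained by the other features.
            r_j = yc - Xc @ beta + Xc[:, j] * beta[j]
            rho = Xc[:, j] @ r_j / n
            beta[j] = soft_threshold(rho, lam) / col_sq[j]
    return y_mean - x_mean @ beta, beta


rng = np.random.default_rng(1)
X = rng.normal(size=(100, 5))
# Made-up data: only the first two features matter, the other three are pure noise.
y = 4 * X[:, 0] - 2 * X[:, 1] + rng.normal(scale=0.5, size=100)

b0, beta = lasso_fit(X, y, lam=0.5)
print(np.round(beta, 2))
```

On data like this, the coefficients of the three noise features are typically set to exactly 0, while the two real effects are kept but shrunk towards zero.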

Elastic Net Regression:

It combines Lasso and Ridge regression: its penalty is a weighted mix of the L1 and L2 penalties.
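A minimal sketch of the combined penalty in plain Python. The parameterization with `alpha` (overall strength) and `l1_ratio` (the share given to the L1 part) follows the convention used by scikit-learn's `ElasticNet`; the function name `elastic_net_penalty` is hypothetical.

```python
def elastic_net_penalty(beta, alpha, l1_ratio):
    """Elastic Net penalty: alpha * (l1_ratio * L1 + (1 - l1_ratio) / 2 * squared L2).

    l1_ratio = 1 gives the pure Lasso (L1) penalty,
    l1_ratio = 0 gives a pure Ridge (L2) penalty.
    """
    l1 = sum(abs(b) for b in beta)          # Lasso part: sum of absolute values
    l2_sq = sum(b * b for b in beta)        # Ridge part: sum of squares
    return alpha * (l1_ratio * l1 + 0.5 * (1 - l1_ratio) * l2_sq)


beta = [3.0, -4.0, 0.0]
for ratio in (1.0, 0.5, 0.0):
    print(ratio, elastic_net_penalty(beta, alpha=1.0, l1_ratio=ratio))
```

For `beta = [3.0, -4.0, 0.0]` and `alpha = 1`, the penalty is 7.0 with `l1_ratio = 1` (pure L1), 12.5 with `l1_ratio = 0` (half the squared L2 norm), and 9.75 halfway between.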

Which regression model should I use?

This is a very important question. We can choose the best model only if we understand our data very well, so we need to focus on data exploration.

For example, if the data has a linear relationship, I can use linear regression; if not, I can use polynomial regression. If my data has many input (independent) variables and multicollinearity, I can consider Lasso, Ridge, or Elastic Net regression.

Note:

Collinearity means that two or more input variables carry the same information. For example, if one variable holds a person's height in inches and another holds the same person's height in centimeters, the two variables are collinear. To identify such issues in our variables, we can use a correlation matrix or a heat map.
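A minimal sketch of the height example, assuming NumPy and made-up data: `np.corrcoef` returns the correlation matrix (each row of its input is treated as one variable), and a value of 1 or -1 between two inputs signals perfect collinearity.

```python
import numpy as np

rng = np.random.default_rng(7)
height_in = rng.normal(loc=67, scale=4, size=200)   # made-up heights in inches
height_cm = height_in * 2.54                        # the same heights in centimeters
# A made-up third variable that is related to height but not identical to it.
weight_kg = 0.9 * height_cm - 90 + rng.normal(scale=8, size=200)

corr = np.corrcoef([height_in, height_cm, weight_kg])
print(np.round(corr, 3))
```

The inch and centimeter columns have a correlation of 1, because one is an exact multiple of the other, so one of them should be dropped before fitting. With pandas, `DataFrame.corr()` returns the same kind of matrix, and seaborn's `heatmap` can draw it as a heat map.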