We have already discussed how to evaluate a linear regression model with R-Squared, Adjusted R-Squared, MSE, etc. Can we use the same metrics to measure accuracy for a classification problem? The simple answer is no: classification problems need their own metrics.

Classification Accuracy:

It is very simple to calculate: the number of correct predictions divided by the total number of observations.

Let's calculate the accuracy with an example. We have 15 observations: 10 of them are True and 5 of them are False.

For the True observations, our model predicted 8 correctly and 2 wrongly; for the False observations, it predicted 4 correctly and 1 wrongly. So the total number of correct predictions is 12 (True and False combined) out of 15.

So the accuracy is 12/15 = 0.8, which means the model predicted 80% of the observations correctly.
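A minimal Python sketch of the same calculation, assuming labels are stored as booleans (True/False). The `actual` and `predicted` lists are hypothetical data built only to reproduce the example: 10 actual True (8 predicted correctly) and 5 actual False (4 predicted correctly).

```python
def accuracy(actual, predicted):
    """Fraction of predictions that match the actual labels."""
    if len(actual) != len(predicted):
        raise ValueError("actual and predicted must have the same length")
    correct = sum(a == p for a, p in zip(actual, predicted))
    return correct / len(actual)

# Hypothetical labels reproducing the example:
# 10 actual True (8 predicted True, 2 predicted False),
# 5 actual False (4 predicted False, 1 predicted True).
actual = [True] * 10 + [False] * 5
predicted = [True] * 8 + [False] * 2 + [False] * 4 + [True] * 1

print(accuracy(actual, predicted))  # 12 / 15 -> 0.8
```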

Confusion Matrix:

Seriously, don't let the name confuse you: the confusion matrix is very easy to understand. It can be explained in four parts, described below.

Let's discuss what True/False Positives and Negatives mean.

True Positive: the actual result is Positive and the prediction is Positive.

True Negative: the actual result is Negative and the prediction is Negative.

False Positive: the actual result is Negative but the prediction is Positive (Type I error).

False Negative: the actual result is Positive but the prediction is Negative (Type II error).
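The four definitions above can be expressed as a small Python function. This is a sketch that assumes True stands for Positive and False for Negative; the function name `outcome` is hypothetical.

```python
def outcome(actual: bool, predicted: bool) -> str:
    """Name the confusion-matrix cell for one (actual, predicted) pair."""
    if actual and predicted:
        return "TP"  # actual Positive, predicted Positive
    if not actual and not predicted:
        return "TN"  # actual Negative, predicted Negative
    if not actual and predicted:
        return "FP"  # actual Negative, predicted Positive (Type I error)
    return "FN"      # actual Positive, predicted Negative (Type II error)

# Print all four combinations.
for actual in (True, False):
    for predicted in (True, False):
        print("actual:", actual, "predicted:", predicted, "->", outcome(actual, predicted))
```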

Now let's fill the confusion matrix with the predictions from the classification accuracy example: TP = 8, FN = 2, FP = 1 and TN = 4.
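Here is a sketch of how the example's confusion matrix could be built in Python with `collections.Counter`. The label lists are hypothetical data constructed to match the example, and the layout (rows are actual classes, columns are predicted classes) is one common convention assumed here, not necessarily the one used in the original figure.

```python
from collections import Counter

# Hypothetical labels reproducing the running example (True = Positive).
actual = [True] * 10 + [False] * 5
predicted = [True] * 8 + [False] * 2 + [False] * 4 + [True] * 1

# Count how often each (actual, predicted) pair occurs.
counts = Counter(zip(actual, predicted))
tp = counts[(True, True)]    # actual True, predicted True
fn = counts[(True, False)]   # actual True, predicted False
fp = counts[(False, True)]   # actual False, predicted True
tn = counts[(False, False)]  # actual False, predicted False

# Rows are actual classes, columns are predicted classes.
print("              Predicted True  Predicted False")
print(f"Actual True   {tp:>14}  {fn:>15}")
print(f"Actual False  {fp:>14}  {tn:>15}")
```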

Two important metrics are calculated from the confusion matrix: Precision and Recall.

Precision:

Precision is the proportion of correct positive predictions out of all positive predictions: TP / (TP + FP).

precision = 8/(8+1) = 8/9 ≈ 0.889 = 88.9%

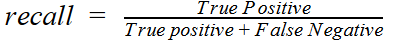
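A minimal Python sketch of the precision formula, using the counts from the example (TP = 8, FP = 1). Returning 0.0 when there are no positive predictions is a convention chosen for this sketch; other tools may handle that case differently.

```python
def precision(tp: int, fp: int) -> float:
    """Correct positive predictions divided by all positive predictions."""
    predicted_positive = tp + fp
    if predicted_positive == 0:
        return 0.0  # convention chosen here: no positive predictions -> 0.0
    return tp / predicted_positive

print(round(precision(tp=8, fp=1), 3))  # 8 / 9 -> 0.889
```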

Recall:

Recall is the proportion of actual positive cases that the model correctly predicted as positive: TP / (TP + FN).

recall = 8/(8+2) = 8/10 = 0.8 = 80%

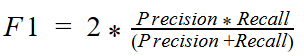
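The recall formula as a Python sketch, using the example's counts (TP = 8, FN = 2). As with precision, returning 0.0 when there are no actual positives is an assumed convention for this sketch.

```python
def recall(tp: int, fn: int) -> float:
    """Correct positive predictions divided by all actual positives."""
    actual_positive = tp + fn
    if actual_positive == 0:
        return 0.0  # convention chosen here: no actual positives -> 0.0
    return tp / actual_positive

print(recall(tp=8, fn=2))  # 8 / 10 -> 0.8
```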

F1 score:

The F1 score is a single metric that summarizes classification performance, much as R-Squared summarizes a linear regression. It is more convenient to track one number than two (in our case, Precision and Recall), so the F1 score combines them into one average.

Specifically, the F1 score is the harmonic mean of Precision and Recall: F1 = 2 × (Precision × Recall) / (Precision + Recall).

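Substituting the Precision and Recall values calculated above gives an F1 score of about 0.84, or 84%.

Why the harmonic mean? Because we are averaging rates (percentages), and the harmonic mean is the appropriate average for rates. It is also pulled toward the smaller of the two values, so a model cannot reach a high F1 score by doing well on only one of Precision or Recall. For more information about the harmonic mean, refer to this site.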

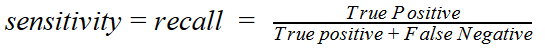
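A Python sketch of the F1 score as the harmonic mean of Precision and Recall, applied to the example's values (8/9 and 8/10). The second pair of inputs (Precision 1.0, Recall 0.1) is hypothetical and only illustrates how the harmonic mean punishes an imbalance that the arithmetic mean hides.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

p, r = 8 / 9, 8 / 10  # precision and recall from the example
print(round(f1_score(p, r), 2))  # -> 0.84

# Hypothetical unbalanced model: perfect precision, very poor recall.
p2, r2 = 1.0, 0.1
print(round(f1_score(p2, r2), 2))  # harmonic mean -> 0.18
print(round((p2 + r2) / 2, 2))     # arithmetic mean -> 0.55
```

For the example, the F1 score equals 2TP / (2TP + FP + FN) = 16/19 ≈ 0.842, the same value written directly in terms of the confusion-matrix counts.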

Sensitivity and Specificity:

Sensitivity, also called the True Positive Rate, is the same as Recall, which we have already calculated.

It tells us what fraction of the actual positives were predicted correctly. High sensitivity means most of the True cases are predicted correctly (a sensitivity of 1 means all of them). In our case, 80% of the True cases are predicted correctly and 20% are predicted wrongly.

Sensitivity = 8/(8+2) = 8/10 = 0.8 = 80%

Specificity, also called the True Negative Rate, tells us what fraction of the actual negatives were predicted correctly: TN / (TN + FP). High specificity means most of the False cases are predicted correctly.

Specificity = 4/(4+1) = 4/5 = 0.8, which means the model predicted 80% of the False values correctly.

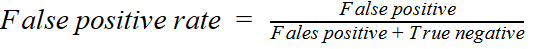
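Both rates as a short Python sketch, using the example's counts. It assumes there is at least one actual positive and one actual negative, so neither denominator is zero.

```python
def sensitivity(tp: int, fn: int) -> float:
    """True positive rate: fraction of actual positives predicted positive."""
    return tp / (tp + fn)

def specificity(tn: int, fp: int) -> float:
    """True negative rate: fraction of actual negatives predicted negative."""
    return tn / (tn + fp)

tp, fn, fp, tn = 8, 2, 1, 4  # counts from the running example
print(sensitivity(tp, fn))   # 8 / 10 -> 0.8
print(specificity(tn, fp))   # 4 / 5 -> 0.8
```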

False Positive Rate:

The False Positive Rate is the fraction of actual negatives that were wrongly predicted as positive: FP / (FP + TN). It is also equal to 1 − Specificity.

FPR = 1/(1+4) = 0.2 = 20%, which means 20% of the actual False values were incorrectly predicted as True.

As discussed, the False Positive Rate can also be calculated as 1 − Specificity. We calculated Specificity above as 0.8 (80%), so FPR = 1 − 0.8 = 0.2 (20%).

Receiver operating characteristic (ROC) curve:

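An ROC curve plots the True Positive Rate (Sensitivity) on the y-axis against the False Positive Rate (1 − Specificity) on the x-axis. Each point on the curve corresponds to one decision threshold used to turn the model's scores into True/False predictions.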
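A minimal Python sketch of how ROC points can be produced by sweeping the decision threshold. It assumes the model outputs a score for each observation and that a score at or above the threshold means "predict True". The labels and scores are hypothetical, invented for illustration, and the function name `roc_points` is also hypothetical.

```python
def roc_points(actual, scores):
    """Return (FPR, TPR) pairs, one per distinct threshold, highest threshold first.

    An observation is predicted positive when its score >= threshold.
    Assumes at least one actual positive and one actual negative.
    """
    positives = sum(actual)
    negatives = len(actual) - positives
    points = [(0.0, 0.0)]  # threshold above every score: nothing predicted positive
    for threshold in sorted(set(scores), reverse=True):
        tp = sum(1 for a, s in zip(actual, scores) if a and s >= threshold)
        fp = sum(1 for a, s in zip(actual, scores) if not a and s >= threshold)
        points.append((fp / negatives, tp / positives))
    return points

# Hypothetical labels and model scores, for illustration only.
actual = [True, True, True, True, False, True, False, False, True, False]
scores = [0.95, 0.90, 0.80, 0.75, 0.70, 0.60, 0.55, 0.40, 0.30, 0.10]

for fpr, tpr in roc_points(actual, scores):
    print(f"FPR={fpr:.2f}  TPR={tpr:.2f}")
```

Lowering the threshold can only add positive predictions, so both rates never decrease and the curve ends at (1, 1), where every observation is predicted True.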

Area under the curve (AUC):

AUC measures the overall performance of a classification model as the area under its ROC curve. It ranges from 0 to 1: an AUC of 1 means a perfect classifier, while 0.5 means the model is no better than random guessing.
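A sketch of computing AUC from a list of ROC points with the trapezoidal rule, one common way to approximate the area under a piecewise-linear curve. The three point lists are hypothetical: a perfect classifier, a random guess (the diagonal), and an invented model in between. The points are assumed to be sorted by FPR.

```python
def auc_trapezoid(points):
    """Area under a curve given (x, y) points sorted by x, via the trapezoidal rule."""
    area = 0.0
    for (x0, y0), (x1, y1) in zip(points, points[1:]):
        area += (x1 - x0) * (y0 + y1) / 2  # area of one trapezoid
    return area

# Hypothetical ROC points (FPR, TPR), sorted by FPR.
perfect = [(0.0, 0.0), (0.0, 1.0), (1.0, 1.0)]
random_guess = [(0.0, 0.0), (1.0, 1.0)]
some_model = [(0.0, 0.0), (0.0, 0.5), (0.25, 0.5), (0.25, 0.75),
              (0.5, 0.75), (0.5, 1.0), (1.0, 1.0)]

print(auc_trapezoid(perfect))       # -> 1.0
print(auc_trapezoid(random_guess))  # -> 0.5
print(auc_trapezoid(some_model))    # -> 0.8125
```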